By Robert Walker

CEO & Founder

Surveys & Forecasts, LLC

Norwalk, Connecticut

rww@safllc.com

Synthetic Data Use in Qualitative Research

I’ll admit to a flicker of doubt when I set out to unpack synthetic data for a qualitative audience. Could I condense this sprawling, algorithm-laden topic into a few pages and still keep it relevant and readable? Would I be asking seasoned moderators—already juggling focus groups and quick-turn quant surveys—to slog through jargon that might be found in a data-science journal? The risk of biting off more than I could chew felt real. Yet conversations with clients and colleagues kept circling the same questions: Can synthetic data ease recruiting headaches? Could it safeguard privacy without derailing insight? The curiosity is there, and the stakes are growing. So, with equal parts trepidation and conviction, I set out to translate the big ideas (no PhD required) into practical guidance that any qualitative researcher can weigh, test, and adapt.

Why Has Synthetic Data Become So Newsworthy?

Qualitative research thrives on conversation—a raised eyebrow, hand gestures, a sideways glance, a metaphor that helps a respondent finally feel understood. For years, we assumed those conversations would keep flowing without any change, as if people were an endless resource eager to answer yet another screener. Yet project timelines haven’t budged—clients still expect quality results against a backdrop of ever-shrinking timetables.

Our collective belief was also shaken in April 2025, when federal prosecutors charged eight sample industry executives with bilking clients out of $10 million in fabricated survey interviews—fake people, real invoices, shaken trust.¹ Against that backdrop, the 2024 GRIT Insights Practice Report² lists synthetic data (algorithm generated stand ins for real respondents) as one of the fastest rising methods researchers say they are testing right now. Against this backdrop, synthetic data is less a futuristic indulgence than a practical response to mounting constraints: scarce attention, damaged trust, and unyielding deadlines. It will not replace the craft of listening, but it may offer breathing room when traditional sampling alone cannot shoulder the load.

But what is synthetic data? Is it a cold replacement for truly live voices? Or is it a “safety net” of sorts, in which algorithms study the broad distributions of age, education, income, and behavior from a trusted source, and then weave a new, privacy safe fabric that follows the same contours but without copying a single thread? Used wisely, it might ease recruiting pressure, catch logic errors before fielding, or let teams share sensitive findings without exposing respondent identities. Then again, like anything that is used carelessly, it becomes just another shiny object.

This article explores a middle ground—how synthetic data can support the deep listening that defines qualitative research and insights.

What Synthetic Data Is—In Simple Terms

Picture a mapmaker tracing the outlines of a neighborhood: every street, park, and block is in the right place, but the people and license plates are left blank. Synthetic data works the same way. An algorithm that is used to generate synthetic data will study a genuine dataset—say, a national tracking study of 1,000 shoppers—then draws a fresh version that keeps the streets (i.e., the overall patterns) while leaving every resident (i.e., respondents) anonymous. In our neighborhood example, you can measure traffic flow, see where the cafés cluster, or even test a new bus route, yet no individual can be found or contacted. In a research context, you can capture stores shopped, the profile of brand buyers, brands bought on sale, the impact of coupons, advertising recall, and the intersection of all these variables. But isn’t synthetic data “fake” data? While synthetic data was not collected from live human beings, the short answer is no. We could break it down like this:

Synthetic data is algorithmically derived from human records and behaves as a reconstruction, not a forgery. The algorithm doesn’t lie about who it is; it openly creates new records that reflect the real sample’s shape without copying any one person.

Synthetic data is algorithmically derived from human records and behaves as a reconstruction, not a forgery. The algorithm doesn’t lie about who it is; it openly creates new records that reflect the real sample’s shape without copying any one person.

Fake respondents are stealth impostors—bots, dishonest panelists, or data from dishonest companies pretending to be or act as real records. Answers from fake respondents obey no statistical rule or pattern; most importantly, the fact that the data does not come from real respondents is not disclosed to the client or even the research firm.

How Do “Synthetic Personas” and “Synthetic Data” Differ?

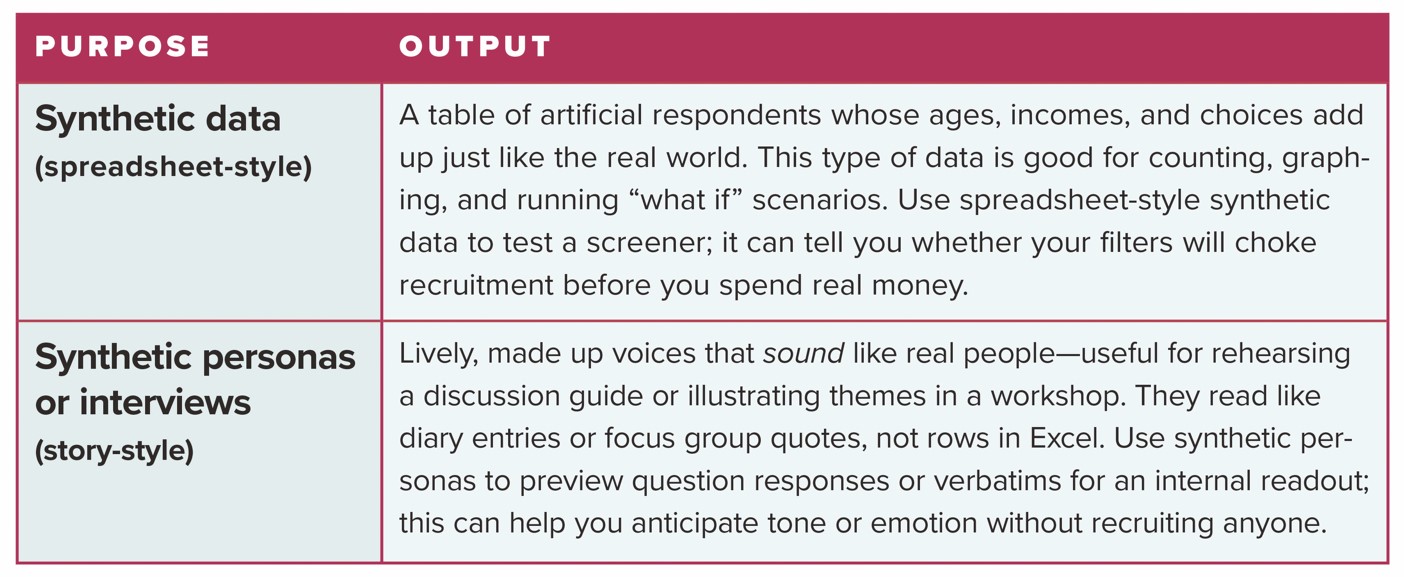

Before we get too lost in terminology, it helps to see how the two most common “synthetic” outputs actually serve different roles. The table below contrasts spreadsheet-style synthetic datasets—built for counting and cross-tabbing—with narrative-

style synthetic personas and interviews—built for tone, texture, and story rehearsal.

In effect, they are cousins, not twins. In short, synthetic data mirrors the crowd’s shape so you can run numbers, while synthetic personas mimic the crowd’s voice so you can fine tune your conversation. Spreadsheet-style synthetic data is designed for arithmetic: you can sort it, tally it, plot it, and run quick “what-if” totals without worrying about individual voices. Its main job is to tell you how many and how often. Synthetic personas or interviews, by contrast, are built for storytelling: they give you a sense of why—the motivations, objections, and language that make a concept feel real in a room. Each serves a different agenda or question, and together they round out the picture.

Integrating Synthetic Data with Primary and Secondary Sources

Synthetic data earns its keep only when it complements—not supplants—information gathered from real people or established archives. Below are three situations that illustrate how a carefully modeled synthetic file can extend the reach of other evidence while keeping methodological rigor intact.

Extending qualitative insights into provisional numbers. Suppose a project ends with 20 in depth interviews. Clear themes emerge, yet the client still needs an approximate sense of scale. By training a generator on the distributions observed in these interviews (e.g., age, category, brands, purchase cycle), a qualitative consultant can create several hundred statistically consistent records. The expanded file supports exploratory crosstabs (e.g., interest in a feature among lapsed versus loyal users) without claiming the precision of a full survey or adding fieldwork dollars. Again, we are using algorithmically generated records to explore what the possible outcomes might be, but not to replace actual interviews.

Testing new findings against licensed syndicated studies. Syndicated studies often have benchmarks. These often arrive as aggregate tables (i.e., the respondent level data is not available). A synthetic “twin” of last year’s benchmark—built to match the published totals and other known relationships—allows the consultant to overlay fresh primary data that may have been collected. The consultant can then judge whether attitudinal shifts seen in the fresh data are genuine or reflect sampling noise. Because the synthetic twin contains no proprietary rows, the consultant remains within the license while gaining practical probabilistic comparisons.

Bridging time gaps in datasets. A regional grocery chain is exploring same-day delivery outside its core market area. The most recent public data on household internet speed (a key predictor of online grocery adoption) comes from the 2022 American Community Survey (ACS). Rather than wait for the next release, the chain blends the 2022 ACS table with fresh county-level data (e.g., population density, new-home permits, broadband coverage) and asks a generator to create a 2025 synthetic update. The chain weighs this data and then conducts an online concept test on those synthetic proportions, helping the logistics team choose three pilot markets while the official figures are still a year away.

Across these applications, the priorities are clear: primary data establishes the “ground truth”; secondary or public data provides context. Then, synthetic data offers a provisional “scaffold” for decisions that cannot wait. But each step must be documented, labeled, and, if possible, validated by subsequent fieldwork.

Practical Ways Qualitative Consultants Can Put Synthetic Data to Work

Synthetic data serves best as a supporting actor—filling gaps while live voices remain central. Four applications illustrate how it adds value without overstating its role.

Refining screener logic before fieldwork begins. Complex recruit profiles often combine several ordinary filters—job role, location, usage frequency—into a rare combination that chokes incidence. By generating a modest synthetic sample that mirrors your expected audience, you can run the screener in advance, observe where eligibility collapses, and adjust wording or order before recruiters make a single call.

Testing the flow of a discussion guide. Even seasoned moderators occasionally overlook a question that falls flat. Posting a draft guide to a synthetic bulletin board group reveals where engagement flags and where follow up probes feel redundant. Small edits made at this stage can lift the energy of the eventual live session without adding recruits or extending deadlines.

Sharing narratives without revealing identities. Highlight reels and verbatim quotes enrich debriefs, yet privacy policies or NDAs sometimes restrict how widely these materials can circulate. Synthetic personas (see description above) crafted from the same thematic patterns, but containing no personal identifiers, allow consultants to illustrate findings in workshops and internal memos while safeguarding respondent confidentiality.

Across these uses, synthetic data acts as a complement: it reduces rework, clarifies next steps, and protects privacy, all while leaving the essential business of listening to real people firmly in place.

Knowing the Boundaries—Using Synthetic Data Wisely

Synthetic data is a helpful assistant, but it is only as strong as the seed you plant. What is the seed? Think of it like a sourdough starter: it might be a small pilot survey or a set of target percentages that you supply as a table, such as in Excel. The generator studies this “starter batch”—the interaction of age, education, income, and behaviors—and then “bakes” new rows that follow the same patterns. If the seed is balanced, the synthetic file will be balanced. If key voices are missing, their absence will echo through every synthetic record. Note that:

- Synthetic data copies ingredients, not people. The model reproduces patterns—older adults tending to earn more, heavier users clustering in certain regions—without repeating any single respondent. A good seed produces a good echo; a weak seed produces a weak echo.

- Patterns first, surprises by choice. The synthetic file mirrors the seed by default, but you can introduce intentional outliers—either by adding edge-case records to the seed or adjusting model settings—when you need to stress-test an analysis or explore extreme scenarios. Just label them clearly so their role is transparent.

- Run a sanity check. If you are working with many records, open the file and crosstab one or two key variables, i.e., income by brand use, age by brand loyalty, etc. If anything looks off (e.g., you know that brand use increases with income, but the data doesn’t reflect this), then revisit the seed or the model settings.

- Label the synthetic parts. Whenever you share a table or a quote generated by a model, always tag it clearly—“synthetic estimate,” “illustrative quote,” or similar. Clients can then weigh the impact of synthetic records on the business decision appropriately.

Synthetic data toolmakers are steadily adding bias checks and privacy safeguards, but the fundamentals remain: start with a thoughtful seed, label outputs clearly, confirm privacy, and sanity-check the results. Follow these steps, and synthetic data becomes a reliable helper—extending your reach without crowding out the live human voices at the heart of qualitative insight.

A Beginner’s Playbook—Trying Synthetic Data on a Modest Budget

A Beginner’s Playbook—Trying Synthetic Data on a Modest Budget

You do not need a data-science degree or a six-figure platform to run a small synthetic pilot. A laptop, a clean seed file, and an hour of focused time will show you what the method can (and cannot) do for your practice.

Choose a low-risk project. Pick a study where a few hundred extra rows would clarify direction but not drive the final decision—perhaps last quarter’s pilot survey or a set of demographic quotas you struggled to fill. This file becomes your seed.

Select a tool that matches your comfort level. Several vendors—Gretel, MOSTLY AI, and others—offer no-cost trial tiers. Upload your seed, choose “tabular,” and follow the on-screen wizard. For those of you already versed in ChatGPT, you can train your own personas to mimic real-world segments and for each one to react to different stimuli. For the more adventurous, you can even install a synthetic data generator on your own machine.

Generate the synthetic rows. Most tools default to creating the same number of rows as your seed; you can adjust that to any target. The generator recreates the overall shape—age, education, income, and their relationships—without copying real records.

Share, gather feedback, and iterate. Send the draft to a trusted colleague or client champion. Ask: Does this help us answer the next question? Adjust the seed or settings as needed and rerun—each cycle takes minutes, not days.

Within a single afternoon, you will know whether synthetic data lightens your recruitment load, sharpens your screeners, or simply clarifies what live work still must be done. The cost is really minimal, the risk is low, and the learning curve is gentle—an accessible first step before you consider larger, paid solutions.

Synthetic Data: Perhaps a Friend and a Foe?

Synthetic data is neither savior nor saboteur; it is a power tool. My advice to qualitative researchers: keep the human voice at the center, but become a power user. Try to understand it and learn how to use it. In steady hands, it completes incomplete cells, helps anticipate answers to tough questions, and lets teams share insights without risking privacy. In careless hands, it can amplify blind spots or slide into overconfidence. The difference is not in the algorithm but in the craft of the researcher who wields it. Use the model to smooth recruiting, preview guides, and sanity-check those early themes. Our research field advances not simply when new tools appear, but when curious and smart practitioners test them, refine them, and—most importantly—keep the insights human!

For qualitative consultants, a good rule of thumb is: Let synthetic data do the heavy lifting so live conversations can do the delicate listening.

Resources

- U.S. Department of Justice Lawsuit, April 2025: www.justice.gov/usao-nh/pr/eight-defendants-indicted-international-conspiracy-bill-10-million-fraudulent-market

- 2024 GRIT study: https://greenbook.org/grit/grit-business-and-innovation-edition