By Lauren McCluskey

Principal

Responsive Research, Inc.

Tarzana, California

lauren@responsive.rocks

CLICK HERE for Spanish translation by Pablo Gutierrez

LEXIA Insights

Mexico City, Mexico

pablo.gutierrez@lexia.com.mx

A Pivotal Moment in My AI Moderation Journey

It was a bluebird day on the mountain, the kind of crisp, sun-drenched afternoon in Utah that makes you forget about your inbox for a few hours. But I won’t forget that moment on the chairlift in March, relishing the rare silence between runs, when I got the email. It was from a new potential client I respect—an innovation-driven global insights team. The subject line seemed normal enough, but then, there it was . . . “~75–100 IHUTs with 45-minute AI-driven IDIs.” Just like that, AI moderation wasn’t theoretical anymore; it was on my desk.

I re-read the bid request a couple of times. The question stopped me cold. Are they really asking me for AI-led IDIs?

After the initial shock, the emotion that hit me first was fear—a wave of it. Was this the beginning of the end of human moderators? Was my craft—the one I’d spent years honing—about to be replaced by a bot that never needed coffee or calendar coordination?

But right on the heels of fear came curiosity—bright and undeniable. I knew I couldn’t just ignore this. If clients were already asking about AI moderation, then I needed to get educated on it. Not eventually. Not hypothetically. Now.

That email marked the beginning of my deep dive into AI moderation. What I discovered along the way changed the way I think about my role as a qualitative researcher—not as someone being replaced, but as a practitioner being redefined.

DIVING IN: FROM FEAR TO CURIOSITY TO CONFIDENCE

Back at the condo, boots off and post-jacuzzi soak, I dove in. I started by scheduling demos with several AI moderation platforms. I had candid conversations with colleagues in the QRCA community who I knew were already exploring AI tools. But those conversations confirmed something I’d suspected: not a single colleague had been approached by a client with an AI moderation request.

It was clear that very few researchers had been experimenting with conversational AI at the fieldwork stage. That’s when I realized the RFP was a gift, an invitation to upskill. My growth mindset kicked in.

The more I explored, the more the initial fear gave way to something far more useful: confidence. Not because I had all the answers, but because I had the framework and curiosity to start asking the right questions.

Sorting Hype from Help: Where AI Moderation Can Add Value

One of the first realizations I had was that not all AI moderation is created equal—and not all research contexts are appropriate for it.

AI moderation can be well-suited to structured environments. If the discussion guide is tight, the objectives are clear, and the emotional stakes are low, AI moderation can be a helpful tool. Think: early-stage exploratory interviews, post-use product testing, or diary studies with large sample sizes. In these scenarios, AI can efficiently probe for clarification and surface early themes.

It’s also an effective tool for scaling insight, especially when timelines are short and budgets are tight. A 10-minute AI-moderated check-in with 50 participants can reveal surface-level trends that steer researchers toward topics that deserve a deeper dive. In some cases, AI moderation might be useful within a hybrid model: AI moderating “agents” can conduct a baseline diary study, and a human moderator follows up with a tailored deep-dive interview—informed by the patterns AI helped surface.

But as I identified some viable use cases, I began to see the gaps just as clearly.

WHERE AI FALLS SHORT AND HUMANS MUST LEAD

The more complex the human dynamics, the more essential the human moderator becomes.

AI can’t yet read the room—and certainly not the subtle, textured room that exists inside a participant’s story. It can’t pick up on a participant’s pause when asked about a recent loss. It doesn’t know when to go off-script because someone just said something that doesn’t quite line up. It can’t sense the difference between compliance and conviction. It doesn’t know when not to ask a question.

As qualitative researchers, we’ve trained our ears, eyes, and instincts to tune into subtext, body language, energy shifts, and silence. These are the very spaces where the richest insight lives—and where AI, at least for now, cannot tread. In fact, my AI moderation rabbit hole reminded me of what my moderating superpower is: my intuition and my fluidity. I’m probably one of the least “rigid” moderators in the industry—zigging and zagging to get to the client’s objective . . . and I almost always go “off-guide” to get there. No AI moderator I’ve seen can compete with that!

This is especially true in industries like women’s health and wellness, travel, fashion, and tech—where identity, aspiration, and emotion are deeply intertwined. When someone is speaking about fertility treatments, self-expression through clothing, or how they feel about an AI therapist, those conversations require emotional safety, cultural fluency, and real-time human discernment.

Choosing Client-Centric Solutions Over Hype

So, what happened with that project request? After all the demos and conversations, I submitted my proposal, and I didn’t recommend AI moderation for that initiative. Those IHUTs required direct observation: watching participants prepare, manipulate, and cook with various ingredients. Yet not a single AI moderation platform was video-enabled. Video was by far the most essential component of the project, and that superseded (at least in my mind) the client’s desire to have the interviews be AI-led.

That, I realized, was the true lesson: It’s not about choosing sides. It’s not about AI versus human moderation. It’s about choosing what best serves the client, the participant, and the insight. It always has been, and it always will be.

As qualitative researchers, we aren’t just moderators. We’re designers of understanding. We architect the emotional space where people feel safe enough to reveal what really matters. This role is still deeply human. But now, we have new tools to help us do it more efficiently—and sometimes at greater scale.

Our Evolving Role: From Moderators to Meaning Makers

What this journey taught me is that our value as moderators is evolving—not eroding.

In the age of conversational AI, the most valuable moderators will be those who start to embrace AI now.

If we want to thrive in this next chapter, we need to replace resistance with intentional adoption. If you’re a fellow moderator wondering where to start, here are two practical ways to explore AI as a co-moderator, not a competitive threat.

Hybrid Use Case 1: Human Starts, AI Finishes

Consider this for high-volume, structured sessions. I’d love to conduct the first 20 interviews myself, then unleash an AI moderator agent (that I trained) to complete the remaining 50–80 sessions. We’ve all been in those scenarios where clients want to conduct an insane number of IDIs. Or where a client feels the need to do a qual-quant study. I’m excited to find a pilot project in which my digital twin moderator, equipped with my own moderating superpowers, can capably conduct the bulk of the sessions. I can observe and fine-tune as needed, and we can collaborate on the analysis.

Hybrid Use Case 2: AI Starts, Human Finishes

I’d love to use AI for early diary tasks or warm-up interviews. Then I can step in for the nuanced human exploration. In essence, let AI moderate light-touch pre-tasks or diary studies, giving us a head start on context. We then take over when it’s time to dig deeper, challenge assumptions, and hold space for ambiguity.

These are just two of many possible hybrid scenarios where we partner with AI to deliver speed and scale for clients who require it.

A Human-AI Code of Collaboration and Maybe Even “Co-Moderation”

We are not obsolete—unless we stop evolving. Moderators don’t need to become technologists, though being comfortable with evolving tech is certainly a benefit. We need to become translators, synthesizers, and emotional anchors in a world awash with synthetic voices. This road ahead isn’t about replacing what makes us great—it’s about reclaiming it and amplifying it, with AI by our side, not in our seat.

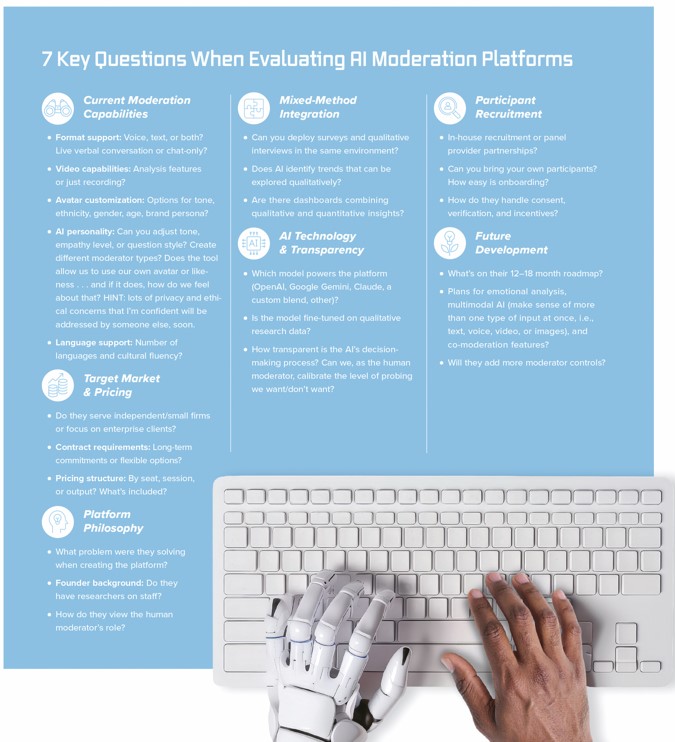

Through trial and error, I developed a list of questions to help other researchers assess whether an AI platform aligns with their needs.

These questions help qualitative researchers distinguish between true research partners and basic automation tools. The right AI platform should respect our craft, offer flexible pricing for independent researchers and smaller teams, integrate seamlessly with our methods, provide transparent technology, simplify participant management, and demonstrate commitment to evolving with researchers’ needs. By evaluating these areas, you can find a platform that’s right for you.

Methodology Innovation and Experimentation

Moderators who thrive will not just employ our traditional, tried-and-true methods. We’ll reinvent and remix them. We will need to design hybrid methods that blend mobile ethnography, sensory exercises, live sessions, and asynchronous diary probes in new ways. This creativity is where humans shine: designing the research “stage” for insight theater. AI can help us execute, but thankfully, it still needs human architects to invent and orchestrate the experience.

The future favors moderators who invest in hybrid research designs, immersive methods, and multisensory experiences. We’ll need to learn new tools—but more importantly, we’ll need to embrace experimentation as part of our “brand” as moderators.

Final Takeaway: Stay Curious

That email on the ski slope jolted me. But it also did something else: it reminded me that curiosity is more powerful than fear. We’re living through a seismic shift in how research is designed, moderated, and delivered. But rather than retreating, we have the chance to lead—by helping clients navigate what’s possible, what’s useful, and what’s humanly irreplaceable.

AI is here to stay. Not as a threat, but as a tool. And we? We’re more valuable than ever, as long as we stay human, stay curious, and stay committed to meaning.